Researchers from Tsinghua University Unveil ‘Gemini’: A New AI Approach to Boost Performance and Energy Efficiency in Chiplet-Based Deep Neural Network Accelerators

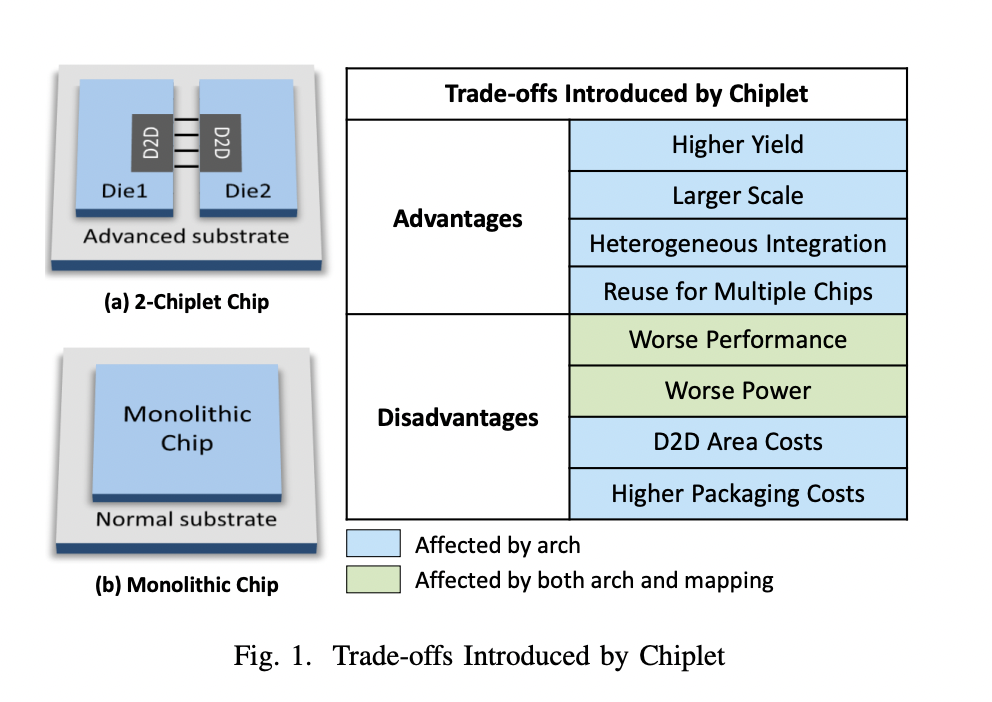

Researchers from multiple universities have addressed the challenge of designing large-scale DNN chiplet accelerators, focusing on optimizing monetary cost (MC), performance, and energy efficiency. The complexity arises from the interplay of various parameters, including network-on-chip (NoC) communication, core positions, and different DNN attributes. It is crucial to explore a vast design space for effective solutions.

Existing DNN accelerators struggle to achieve an optimal balance between MC, performance, and energy efficiency. To address this, the researchers introduced Gemini, an architecture and mapping co-exploration framework for DNN chiplet accelerators. Gemini employs a novel encoding method to define layer-pipeline (LP) spatial mapping schemes, allowing for an exhaustive exploration of hidden optimization opportunities. The framework utilizes a dynamic programming-based graph partition algorithm and a Simulated-Annealing-based (SA-based) approach for optimization.
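To give a feel for what a dynamic programming-based partition looks like, here is a minimal sketch. It is not Gemini's actual algorithm: it assumes the network is a simple chain of layers rather than a general graph, and the cost model (a fixed per-group overhead plus the slowest layer's cost times the group size) and the names `partition_layers`, `max_group_size`, and `group_overhead` are hypothetical choices made for illustration.

```python
def partition_layers(costs, max_group_size, group_overhead):
    """Split a chain of layers into contiguous groups with minimum total cost.

    Toy cost model (an assumption, not taken from the paper): a group costs
    `group_overhead` plus its slowest layer's cost times the number of layers.
    """
    n = len(costs)
    best = [0.0] * (n + 1)    # best[i]: minimum cost of partitioning layers i..n-1
    next_cut = [n] * (n + 1)  # next_cut[i]: end (exclusive) of the group starting at i

    # Fill the table from the last layer backwards.
    for i in range(n - 1, -1, -1):
        best[i] = float("inf")
        for j in range(i + 1, min(n, i + max_group_size) + 1):
            cost = group_overhead + max(costs[i:j]) * (j - i) + best[j]
            if cost < best[i]:
                best[i], next_cut[i] = cost, j

    # Walk the recorded cut points to recover the groups.
    groups, i = [], 0
    while i < n:
        groups.append((i, next_cut[i]))
        i = next_cut[i]
    return best[0], groups


total, groups = partition_layers([4, 1, 1, 4], max_group_size=4, group_overhead=1)
print(total, groups)
```

In this toy setting the two cheap middle layers end up grouped together while the expensive layers stay alone, because grouping a cheap layer with an expensive one stalls it at the slower layer's pace.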

Gemini’s mapping component uses the SA algorithm with five operators tailored to efficiently explore the LP spatial mapping space. These operators include modifying partition attributes, swapping cores within computational groups (CGs), and adjusting DRAM-related attributes. The framework dynamically optimizes data transmission, intra-core dataflow, and die-to-die (D2D) link communication, contributing to enhanced performance and energy efficiency. An Evaluator module assesses each candidate's MC, energy consumption, and delay.
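The general shape of such a search can be sketched with a generic simulated-annealing loop. This is an illustrative toy, not Gemini's implementation: it uses a single swap operator (loosely modeled on the core-swapping operator described above) instead of five, and the evaluator is a hypothetical stand-in that only counts traffic-weighted mesh hops between consecutive layers. The names `hop_cost` and `anneal`, the linear cooling schedule, and all parameters are assumptions.

```python
import math
import random


def hop_cost(placement, traffic, mesh_width):
    """Toy evaluator: traffic-weighted Manhattan distance between the cores
    that hold consecutive layers. placement[k] is the core id of layer k."""
    total = 0
    for k in range(len(placement) - 1):
        ax, ay = divmod(placement[k], mesh_width)
        bx, by = divmod(placement[k + 1], mesh_width)
        total += traffic[k] * (abs(ax - bx) + abs(ay - by))
    return total


def anneal(placement, traffic, mesh_width, steps=2000, t0=5.0, seed=0):
    """Simulated annealing with one operator: swap the cores of two layers."""
    rng = random.Random(seed)
    current = list(placement)
    current_cost = hop_cost(current, traffic, mesh_width)
    best, best_cost = list(current), current_cost

    for step in range(steps):
        temp = t0 * (1 - step / steps) + 1e-9  # linear cooling (assumed schedule)
        i, j = rng.sample(range(len(current)), 2)
        current[i], current[j] = current[j], current[i]
        new_cost = hop_cost(current, traffic, mesh_width)
        delta = new_cost - current_cost

        # Always accept improvements; accept worse moves with Boltzmann probability.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            current_cost = new_cost
            if new_cost < best_cost:
                best, best_cost = list(current), new_cost
        else:
            current[i], current[j] = current[j], current[i]  # undo the swap

    return best, best_cost


start = [0, 3, 1, 2]  # four layers on a 2x2 mesh of cores
best, cost = anneal(start, traffic=[1, 1, 1], mesh_width=2)
print(hop_cost(start, [1, 1, 1], 2), "->", cost, best)
```

A real evaluator would combine monetary cost, energy, and delay rather than a single hop count, but the accept/reject structure of the loop stays the same.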

On the architecture side, Gemini provides a highly configurable hardware template, enabling precise evaluations of performance, energy, and MC. Experiments with the framework show that the explored architecture and mapping scheme outperform existing state-of-the-art (SOTA) designs such as Simba with Tangram mapping. Gemini also achieves significant improvements with only a marginal increase in MC, demonstrating its effectiveness in co-exploring the architecture and mapping space.

In conclusion, the Gemini framework offers a comprehensive solution to the intricate challenges of designing DNN chiplet accelerators. The experiments not only validate Gemini’s effectiveness but also shed light on the potential benefits of chiplet technology in architecture design. Overall, Gemini stands out as a valuable tool for researchers and practitioners aiming to design high-performance and energy-efficient DNN accelerators.

Pragati Jhunjhunwala is a consulting intern at MarktechPost. She is currently pursuing her B.Tech at the Indian Institute of Technology (IIT), Kharagpur. She is a tech enthusiast with a keen interest in the scope of software and data science applications, and she is always reading about developments in different fields of AI and ML.