How Google DeepMind’s AI Bypasses Traditional Limits: The Power of Chain-of-Thought Decoding Explained!

In the rapidly evolving field of artificial intelligence, efforts to improve the reasoning of large language models (LLMs) have produced a wave of new methods. Traditionally, getting LLMs to handle complex reasoning tasks has depended heavily on the craft of prompting, which asks the model to follow specific instructions or reasoning patterns written by humans. This works, but it demands manual, intricate prompt engineering and can mask how much reasoning ability the model has on its own.

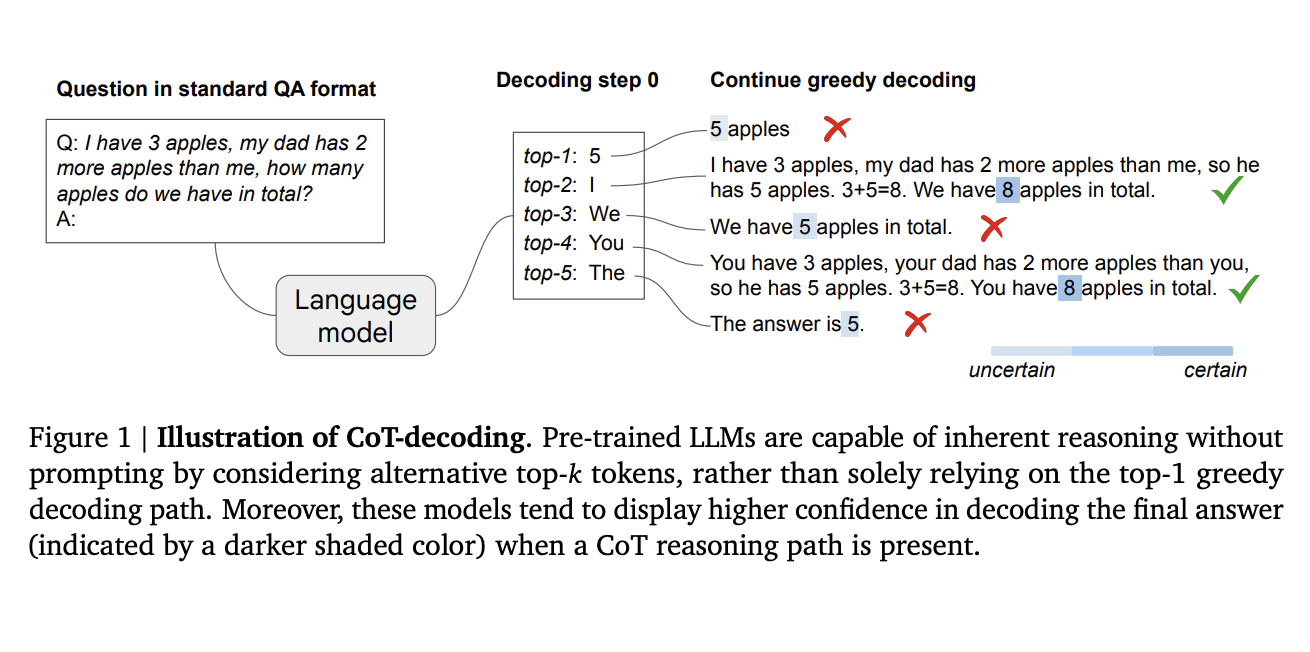

Researchers from Google DeepMind have challenged this reliance on prompting. Their study introduces Chain-of-Thought (CoT) decoding, a method that draws out the reasoning abilities already present in pre-trained LLMs. Rather than using external prompts to elicit reasoning, CoT decoding changes the decoding strategy itself: it explores alternative outputs the model already assigns probability to, uncovering latent reasoning paths that lead to logical conclusions.

The core idea of CoT decoding is to look beyond the single most likely continuation. Instead of committing to the top token at the first decoding step, the researchers considered the alternative top-k tokens at that step and continued each branch with greedy decoding. They found that many of these alternative paths contain coherent, step-by-step chains of thought resembling a human's problem-solving process. Because no special prompt is needed, the method removes much of the manual labor of prompt engineering and lets models reason on their own across a broader range of tasks.
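
To make the branching step concrete, here is a minimal Python sketch, not DeepMind's code. It assumes a hypothetical toy lookup table (`TOY_LM`) in place of a real LLM, and the helper names (`next_token_probs`, `top_tokens`, `greedy_continue`, `branch_first_step`) are illustrative. Following the description above, it takes each of the top-k candidates at the first decoding step and continues every branch greedily; it only generates the candidate paths and does not choose among them.

```python
from typing import Dict, List, Tuple

EOS = "<eos>"

# Hypothetical toy "language model": maps a token prefix to a next-token
# distribution. Toy question: "I have 3 apples, my dad has 2 more apples
# than me. How many apples do we have in total?" (correct answer: 8)
TOY_LM: Dict[Tuple[str, ...], Dict[str, float]] = {
    (): {"Answer:": 0.50, "I have 3;": 0.30, "Dad has 2 more;": 0.20},
    ("Answer:",): {"5": 0.45, "8": 0.40, "6": 0.15},
    ("I have 3;",): {"dad has 5;": 0.90, "dad has 2;": 0.10},
    ("I have 3;", "dad has 5;"): {"total 8": 0.95, "total 5": 0.05},
    ("Dad has 2 more;",): {"5": 0.60, "8": 0.40},
}


def next_token_probs(prefix: Tuple[str, ...]) -> Dict[str, float]:
    """Next-token distribution for `prefix`; prefixes not in the table end the sequence."""
    return TOY_LM.get(prefix, {EOS: 1.0})


def top_tokens(prefix: Tuple[str, ...], k: int) -> List[str]:
    """The k most probable next tokens, most probable first."""
    probs = next_token_probs(prefix)
    return sorted(probs, key=probs.get, reverse=True)[:k]


def greedy_continue(prefix: Tuple[str, ...], max_steps: int = 10) -> List[str]:
    """Extend `prefix` by always appending the single most probable token."""
    tokens = list(prefix)
    for _ in range(max_steps):
        token = top_tokens(tuple(tokens), 1)[0]
        if token == EOS:
            break
        tokens.append(token)
    return tokens


def branch_first_step(k: int, max_steps: int = 10) -> List[List[str]]:
    """Branch on the top-k first tokens, then decode each branch greedily."""
    return [greedy_continue((first,), max_steps) for first in top_tokens((), k)]


if __name__ == "__main__":
    print("greedy:", " ".join(greedy_continue(())))
    for path in branch_first_step(k=3):
        print("branch:", " ".join(path))
```

Running it prints the greedy answer `Answer: 5`, while one of the three branches spells out `I have 3; dad has 5; total 8`. With `k=1` the sketch reduces to plain greedy decoding; a larger `k` costs one extra greedy decode per branch in exchange for a wider search over reasoning paths.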

Empirical results from the study support the method: CoT decoding outperforms standard greedy decoding. The experiments also show that decoding paths containing a chain of thought tend to come with higher model confidence in the final answer, which gives CoT decoding a way to pick the best path among the candidates. On the Grade-School Math (GSM8K) benchmark, for instance, CoT decoding achieved a +26.7% absolute accuracy improvement over greedy decoding when applied to the PaLM-2 Large model. A gain of this size suggests that tapping the reasoning paths already present in LLMs can make them considerably more effective at complex problems.
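
One way to make "confidence" concrete is the gap between the model's top-1 and top-2 token probabilities at each answer token, averaged over the answer; this is our reading of the paper's selection criterion, so treat it as an assumption. The minimal sketch below scores candidate paths this way and keeps the highest-scoring one. The `DecodingPath` class, the helper names, and all probability values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class DecodingPath:
    """A hypothetical decoded candidate plus the model's top-2 probabilities at each answer token."""
    text: str
    answer_top2: List[Tuple[float, float]]  # (top-1 prob, top-2 prob) per answer token


def answer_confidence(path: DecodingPath) -> float:
    """Average gap between the top-1 and top-2 probabilities over the answer tokens."""
    if not path.answer_top2:
        return 0.0
    gaps = [p1 - p2 for p1, p2 in path.answer_top2]
    return sum(gaps) / len(gaps)


def select_path(paths: List[DecodingPath]) -> DecodingPath:
    """Keep the candidate whose answer the model is most confident about."""
    return max(paths, key=answer_confidence)


# Illustrative numbers only, not measurements from any real model.
# The last candidate's answer ("12") is assumed to span two tokens.
candidates = [
    DecodingPath("5", [(0.45, 0.40)]),
    DecodingPath("I have 3 apples, dad has 5, so we have 8 apples.", [(0.95, 0.03)]),
    DecodingPath("We have 12 apples.", [(0.55, 0.30), (0.60, 0.35)]),
]

if __name__ == "__main__":
    for c in candidates:
        print(f"{answer_confidence(c):.3f}  {c.text}")
    print("selected:", select_path(candidates).text)
```

Averaging over answer tokens keeps multi-token answers comparable with single-token ones. In this toy data the path that spells out its reasoning also has the most decisive answer, so it is the one selected.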

The implications of this research extend beyond academic curiosity. By showing that LLMs have intrinsic reasoning capabilities that can be elicited without explicit prompting, the DeepMind team's work points toward more autonomous and versatile AI systems. Such systems could tackle reasoning tasks ranging from multi-step mathematical problems to natural language reasoning without labor-intensive prompt engineering.

In conclusion, Google DeepMind's work on Chain-of-Thought decoding represents a significant shift in how we approach improving the reasoning abilities of large language models. It challenges the assumption that reasoning must be prompted into a model and offers a glimpse of a future where machines can reason through complex tasks independently, a meaningful milestone toward more intelligent and autonomous AI systems.

Check out the Paper. All credit for this research goes to the researchers of this project.

Muhammad Athar Ganaie, a consulting intern at MarktechPost, is a proponent of Efficient Deep Learning, with a focus on Sparse Training. Pursuing an M.Sc. in Electrical Engineering, specializing in Software Engineering, he blends advanced technical knowledge with practical applications. His current endeavor is his thesis on “Improving Efficiency in Deep Reinforcement Learning,” showcasing his commitment to enhancing AI’s capabilities. Athar’s work stands at the intersection of “Sparse Training in DNNs” and “Deep Reinforcement Learning”.