OpenAI Enhances Language Models with Fill-in-the-Middle Training: A Path to Advanced Infilling Capabilities

Transformer-based language models fall into several families. Encoder-only models like BERT and encoder-decoder models like T5 can infill, that is, generate text at a specific location while conditioning on both the preceding and the succeeding context, but the infill regions they see during training are typically shorter than those useful in practice. Causal decoder-based models, such as GPT-3 and its successors, excel at open-ended text generation and in-context learning without task-specific finetuning, but because they condition only on the prefix, they cannot infill out of the box. This gap matters: coding assistants, for instance, rely on infilling for tasks such as docstring or import statement generation, and causal decoders are attractive for such applications because of their architectural simplicity and efficiency.

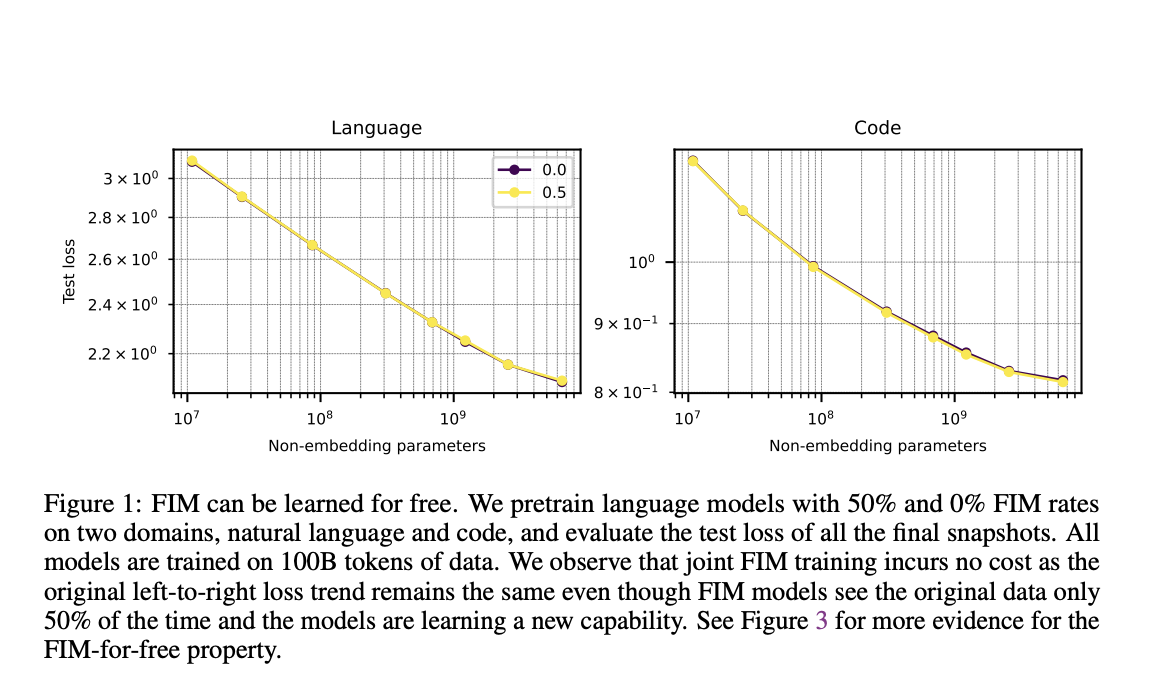

OpenAI researchers demonstrate that autoregressive language models can learn to infill text after a simple transformation of their training data: moving a span of text from the middle of a document to its end. This transformation, known as “fill-in-the-middle” (FIM), doesn’t compromise the models’ original left-to-right generative capability. Based on extensive evaluations, the researchers advocate training future language models with FIM by default, citing its usefulness, simplicity, and efficiency. Through experiments on a range of hyperparameters, they also establish best practices for FIM training. The approach addresses a key limitation of large-scale language modeling, particularly for causal decoder-based models, without altering their architecture.
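To make the transformation concrete, here is a minimal Python sketch of the prefix-suffix-middle (PSM) ordering at the character level. The sentinel strings `<PRE>`, `<SUF>`, `<MID>` and `<EOT>` and the function name `fim_transform` are illustrative placeholders, not the paper's actual token vocabulary; a real pipeline would use reserved special tokens and encode each piece separately. The sketch also assumes the two cut points are drawn uniformly at random and that an end-of-text marker follows the middle so the model learns where an infill stops.

```python
import random

# Placeholder sentinel strings; real FIM models reserve dedicated special tokens.
PRE, SUF, MID, EOT = "<PRE>", "<SUF>", "<MID>", "<EOT>"


def fim_transform(document: str, rng: random.Random) -> str:
    """Move a random middle span of `document` to the end (PSM ordering)."""
    # Two cut points drawn uniformly over character positions 0..len(document).
    i, j = sorted(rng.randint(0, len(document)) for _ in range(2))
    prefix, middle, suffix = document[:i], document[i:j], document[j:]
    # Prefix and suffix become the conditioning context; the middle moves last,
    # followed by an end-of-text marker that tells the model where to stop.
    return f"{PRE}{prefix}{SUF}{suffix}{MID}{middle}{EOT}"


if __name__ == "__main__":
    doc = "def add(a, b):\n    return a + b\n"
    print(fim_transform(doc, random.Random(0)))
```

Because the middle now comes last, ordinary next-token prediction on this string teaches the model to produce the middle given both the prefix and the suffix, with no change to the architecture.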

Text infilling has a long history in language modeling, where masked tokens mark the regions to be filled. Early models like BERT masked individual tokens at random, while later ones like T5 and BART improved results by masking contiguous spans. XLNet and similar models allow flexible token generation orders. Models like InCoder keep left-to-right autoregressive modeling and move the infill region to the end of the sequence. The researchers focus on single-span infilling for practicality and emphasize computational efficiency. Infilling can be achieved either through architectures like SpanBERT or through data formatting alone. Their work builds on these efforts, and notable systems such as code-davinci-002 demonstrate strong infilling capabilities.

At the core of the study is FIM, a data augmentation technique in which a span of text from the middle of a document is moved to its end. Training autoregressive language models on FIM-transformed data does not compromise their left-to-right capability, a property the authors call FIM-for-free. Through extensive experiments and ablations, the study identifies best practices for FIM training. It also shows that finetuning an existing model with FIM is less efficient than pretraining with FIM from the start. The authors introduce new infilling benchmarks and stress that sampling-based evaluations are more informative than test losses when assessing FIM models. Overall, the results confirm that FIM models effectively learn to infill text.
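FIM works as augmentation rather than as a replacement for ordinary data: only a fraction of documents, set by an FIM rate, is transformed, and the rest stay left-to-right. The sketch below assumes a per-document coin flip; `augment`, `fim_rate`, and the sentinel strings are hypothetical names chosen for illustration, and the compact `fim_transform` repeats a character-level PSM transform so the block runs on its own.

```python
import random

# Placeholder sentinel strings standing in for reserved special tokens.
PRE, SUF, MID, EOT = "<PRE>", "<SUF>", "<MID>", "<EOT>"


def fim_transform(document: str, rng: random.Random) -> str:
    """Character-level PSM transform: prefix, suffix, then the moved middle."""
    i, j = sorted(rng.randint(0, len(document)) for _ in range(2))
    return f"{PRE}{document[:i]}{SUF}{document[j:]}{MID}{document[i:j]}{EOT}"


def augment(documents: list[str], fim_rate: float, seed: int = 0) -> list[str]:
    """Transform each document with probability `fim_rate`; keep the rest left-to-right."""
    rng = random.Random(seed)
    out = []
    for doc in documents:
        if rng.random() < fim_rate:
            out.append(fim_transform(doc, rng))
        else:
            out.append(doc + EOT)  # ordinary left-to-right training example
    return out


if __name__ == "__main__":
    docs = [f"document number {k}" for k in range(1000)]
    mixed = augment(docs, fim_rate=0.5)
    share = sum(d.startswith(PRE) for d in mixed) / len(mixed)
    print(f"share of FIM-transformed documents: {share:.2f}")
```

Mixing both kinds of examples in one training stream is what lets a single model keep its left-to-right skill while also learning to infill.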

In document-level FIM, each document is split into a prefix, middle, and suffix before the data is chunked into training contexts, and this chunking can lower the effective FIM rate. If most documents are longer than the model’s context size, the parts of a single transformed document are unlikely to appear together in one context, so many contexts contain only a fragment of an FIM example. Context-level FIM avoids this issue by applying FIM after chunking, so each transformed fragment keeps its prefix, middle, and suffix within a single context. Consequently, context-level FIM often outperforms document-level FIM: its higher effective FIM rate gives the model more consistent and useful training examples.
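The effective-FIM-rate argument can be checked with a toy simulation. The sketch below uses integer token ids, assumes hypothetical ids 0 to 3 for the sentinel and end-of-text tokens, and counts how many training contexts contain a complete PRE, SUF, MID sequence under each strategy. The context-level variant reflects our reading of the approach: chunk first, split each context at end-of-text boundaries, transform each fragment with probability `fim_rate`, then trim back to the context length, since the sentinels add tokens. Document lengths, context size, and FIM rate are arbitrary illustrative values, not the paper's settings.

```python
import random

# Hypothetical token ids for the special tokens; ordinary tokens use ids >= 10.
PRE, SUF, MID, EOT = 0, 1, 2, 3


def fim(tokens, rng):
    """PSM-transform a token list: prefix and suffix first, middle moved to the end."""
    i, j = sorted(rng.randint(0, len(tokens)) for _ in range(2))
    return [PRE, *tokens[:i], SUF, *tokens[j:], MID, *tokens[i:j]]


def chunk(stream, ctx):
    """Cut a flat token stream into consecutive contexts of at most `ctx` tokens."""
    return [stream[k:k + ctx] for k in range(0, len(stream), ctx)]


def split_on_eot(context):
    """Split a context into document fragments, dropping the EOT separators."""
    frags, cur = [], []
    for t in context:
        if t == EOT:
            frags.append(cur)
            cur = []
        else:
            cur.append(t)
    frags.append(cur)
    return frags


def document_level(docs, ctx, fim_rate, rng):
    """Apply FIM to whole documents, then chunk the joined stream."""
    stream = []
    for doc in docs:
        stream += (fim(doc, rng) if rng.random() < fim_rate else doc) + [EOT]
    return chunk(stream, ctx)


def context_level(docs, ctx, fim_rate, rng):
    """Chunk first, then apply FIM to each document fragment inside a context."""
    stream = [t for doc in docs for t in doc + [EOT]]
    contexts = []
    for c in chunk(stream, ctx):
        out = []
        for n, frag in enumerate(split_on_eot(c)):
            if n:
                out.append(EOT)  # restore the separator removed by split_on_eot
            out += fim(frag, rng) if frag and rng.random() < fim_rate else frag
        contexts.append(out[:ctx])  # sentinels add tokens, so trim to the context size
    return contexts


def has_complete_fim(context):
    """True if PRE, SUF and MID appear in that order within one context."""
    want, k = [PRE, SUF, MID], 0
    for t in context:
        if k < 3 and t == want[k]:
            k += 1
    return k == 3


def complete_share(contexts):
    """Fraction of contexts that hold at least one complete FIM example."""
    return sum(map(has_complete_fim, contexts)) / len(contexts)


if __name__ == "__main__":
    rng = random.Random(0)
    # 200 documents of 1,500 to 4,000 tokens, all longer than the 1,024-token context.
    docs = [[rng.randint(10, 99) for _ in range(rng.randint(1500, 4000))] for _ in range(200)]
    for name, fn in [("document-level", document_level), ("context-level", context_level)]:
        share = complete_share(fn(docs, 1024, 0.5, random.Random(1)))
        print(f"{name}: {share:.2f} of contexts hold a complete FIM example")
```

With documents longer than the context, as in this setup, the document-level share should come out far lower than the context-level share, because a transformed document's prefix and suffix rarely fit in one context together.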

In the study, researchers demonstrate that causal decoder-based language models trained on a mix of left-to-right and FIM-transformed data can effectively fill in missing sections of documents. This makes FIM models more versatile than purely left-to-right models: given the surrounding code, they can, for example, generate import statements or complete functions. The authors introduce the concept of FIM-for-free, showing that FIM models achieve test loss comparable to left-to-right models on left-to-right data while achieving lower loss on FIM data. They recommend character-level FIM with random spans for the best performance, and they propose future research directions to enhance infilling capabilities and to explore the broader potential of joint learning in language models.
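At inference time, infilling can reuse the training layout: the prompt contains the prefix and suffix and ends with the middle sentinel, and the model's output up to its end-of-text marker is spliced between them. In the sketch below, `toy_model` is a hypothetical stub standing in for a real FIM-trained model's sampling call, the sentinel strings are placeholders, and returning `None` when no end marker appears is our own design choice.

```python
from typing import Callable, Optional

# Placeholder sentinel strings; they must match whatever the model was trained with.
PRE, SUF, MID, EOT = "<PRE>", "<SUF>", "<MID>", "<EOT>"


def build_infill_prompt(prefix: str, suffix: str) -> str:
    """PSM prompt that stops right after <MID>, so the model writes the middle next."""
    return f"{PRE}{prefix}{SUF}{suffix}{MID}"


def infill(prefix: str, suffix: str, generate: Callable[[str], str]) -> Optional[str]:
    """Return prefix + generated middle + suffix, or None if no end marker was produced."""
    completion = generate(build_infill_prompt(prefix, suffix))
    end = completion.find(EOT)
    if end == -1:
        return None  # treat an infill without an end marker as unfinished
    return prefix + completion[:end] + suffix


def toy_model(prompt: str) -> str:
    """Hypothetical stub standing in for sampling from a FIM-trained model."""
    return "import math\n" + EOT + "text after the stop marker is discarded"


if __name__ == "__main__":
    print(infill("", "print(math.sqrt(2))\n", toy_model))
```

Here the stub fills in a missing import, the kind of task mentioned above; with a real model, `generate` would wrap whatever sampling interface that model exposes.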

Check out the Paper and Github. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.