Microsoft Research Introduces ‘MEGAVERSE’ for Benchmarking Large Language Models Across Languages, Modalities, Models, and Tasks

On many tasks and benchmarks, Large Language Models (LLMs) have outperformed earlier generations of language models, and on occasion they have even come close to matching or surpassing human performance. While some models may seem to have impressive skills, it is not always easy to tell whether that is due to enhanced model capabilities or something else entirely, such as contamination of test datasets or a lack of datasets that accurately assess their abilities. Because of this, research into evaluating LLMs has grown in importance.

Most studies that have attempted to assess LLMs, whether through human review, qualitative tests for specific competencies, or benchmarking, have primarily focused on the English language. This research has uncovered a significant disparity in the proficiency of LLMs in English compared to other languages. However, evaluating LLMs in languages other than English poses numerous challenges, including the scarcity of multilingual benchmarks for reasoning, conversation, and dialogue across various language families.

Earlier findings from the MEGA (Multilingual Evaluation of Generative AI) benchmark provide valuable insights into the multilingual capabilities of LLMs. GPT-4 performs well compared to state-of-the-art (SOTA) fine-tuned language models such as TULRv6. However, GPT models perform noticeably worse on low-resource languages and on languages written in non-Latin scripts.

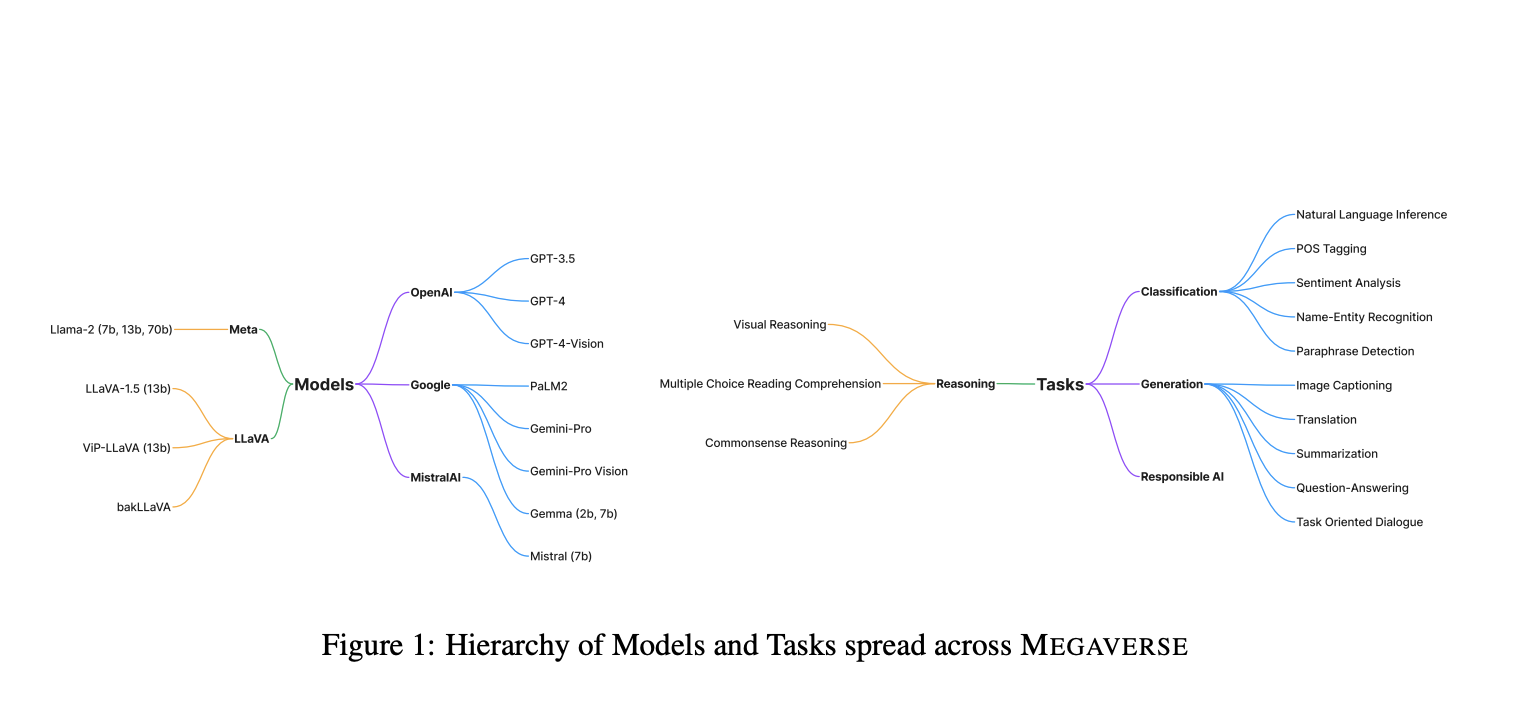

Building on the MEGA benchmark, researchers from Microsoft Corporation created MEGAVERSE by adding six new datasets, expanding coverage to 22 datasets and 83 languages, including many low-resource African languages.

This work provides valuable insights for developers and researchers. In particular, the team found that larger commercial models such as GPT-4 and Gemini-Pro outperform smaller models such as Gemma, Llama, and Mistral on low-resource languages. This pattern holds across most of the datasets examined, indicating that smaller models struggle with multilingual tasks. The authors therefore suggest that approaches such as fine-tuning, language-family-based models, and language-specific models deserve further investigation as ways to improve multilingual performance.

On the multimodal datasets, GPT-4-Vision performed better than LLaVA and Gemini-Pro-Vision. A language model's efficiency also depends on its tokenizer's fertility, the average number of tokens the tokenizer produces per word. The paper's fertility analysis of each tokenizer shows that fertility is lower for Latin-script languages such as English and Spanish than for morphologically complex languages such as Telugu, Malay, and Malayalam.
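To make the fertility metric concrete, here is a minimal Python sketch, assuming fertility is computed as total tokens divided by whitespace-delimited words. The `toy_tokenize` function is a hypothetical stand-in that chops each word into pieces of at most four characters; it is not any real model's tokenizer, and it is not the paper's measurement code. In practice you would pass in the tokenizer of the model under study (for example, the `tokenize` method of a Hugging Face tokenizer).

```python
from typing import Callable, Iterable, List


def fertility(sentences: Iterable[str], tokenize: Callable[[str], List[str]]) -> float:
    """Average number of tokens produced per whitespace-delimited word.

    Assumption: words are counted by splitting on whitespace, which is a
    simplification that does not suit languages written without spaces.
    """
    total_words = 0
    total_tokens = 0
    for sentence in sentences:
        total_words += len(sentence.split())
        total_tokens += len(tokenize(sentence))
    if total_words == 0:
        raise ValueError("no words to measure")
    return total_tokens / total_words


def toy_tokenize(text: str, max_piece: int = 4) -> List[str]:
    """Hypothetical tokenizer: splits each word into chunks of at most max_piece characters."""
    pieces: List[str] = []
    for word in text.split():
        pieces.extend(word[i:i + max_piece] for i in range(0, len(word), max_piece))
    return pieces


if __name__ == "__main__":
    # Short words each map to one token, so fertility is 1.0.
    print(fertility(["the cat sat"], toy_tokenize))
    # "internationalization" (20 chars) becomes 5 pieces: 7 tokens / 3 words.
    print(fertility(["internationalization is hard"], toy_tokenize))
```

A fertility of 1.0 means one token per word; higher values mean the same text becomes a longer token sequence, which raises inference cost and uses up more of the model's context window.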

Due to computational and time constraints, the researchers note that they could run the contamination study only on the 7B variants of the open-source models, and not on all datasets. Dataset contamination is a major problem for benchmarking studies in languages other than English. According to their contamination analysis of commercial and open-source models, almost all of the models are likely contaminated with MEGAVERSE datasets. Because newly creating multilingual evaluation datasets is difficult under financial and resource constraints, it is important to keep such datasets out of LLM training data. To that end, the team plans to improve its ability to detect contamination and to put safeguards in place to prevent it in the future.

Check out the Paper. All credit for this research goes to the researchers of this project.

Dhanshree Shenwai is a Computer Science Engineer with solid experience at FinTech companies covering the financial, cards and payments, and banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements that make everyone's life easier in today's evolving world.