Google AI Introduces an Open Source Machine Learning Library for Auditing Differential Privacy Guarantees with only Black-Box Access to a Mechanism

Google researchers address the challenge of ensuring that differentially private (DP) mechanisms are implemented correctly by introducing DP-Auditorium, a large-scale library for auditing differential privacy. Differential privacy is essential for protecting data, especially given upcoming regulations and growing awareness of data privacy issues. Verifying that a mechanism actually upholds differential privacy is difficult, particularly in complex and diverse systems.

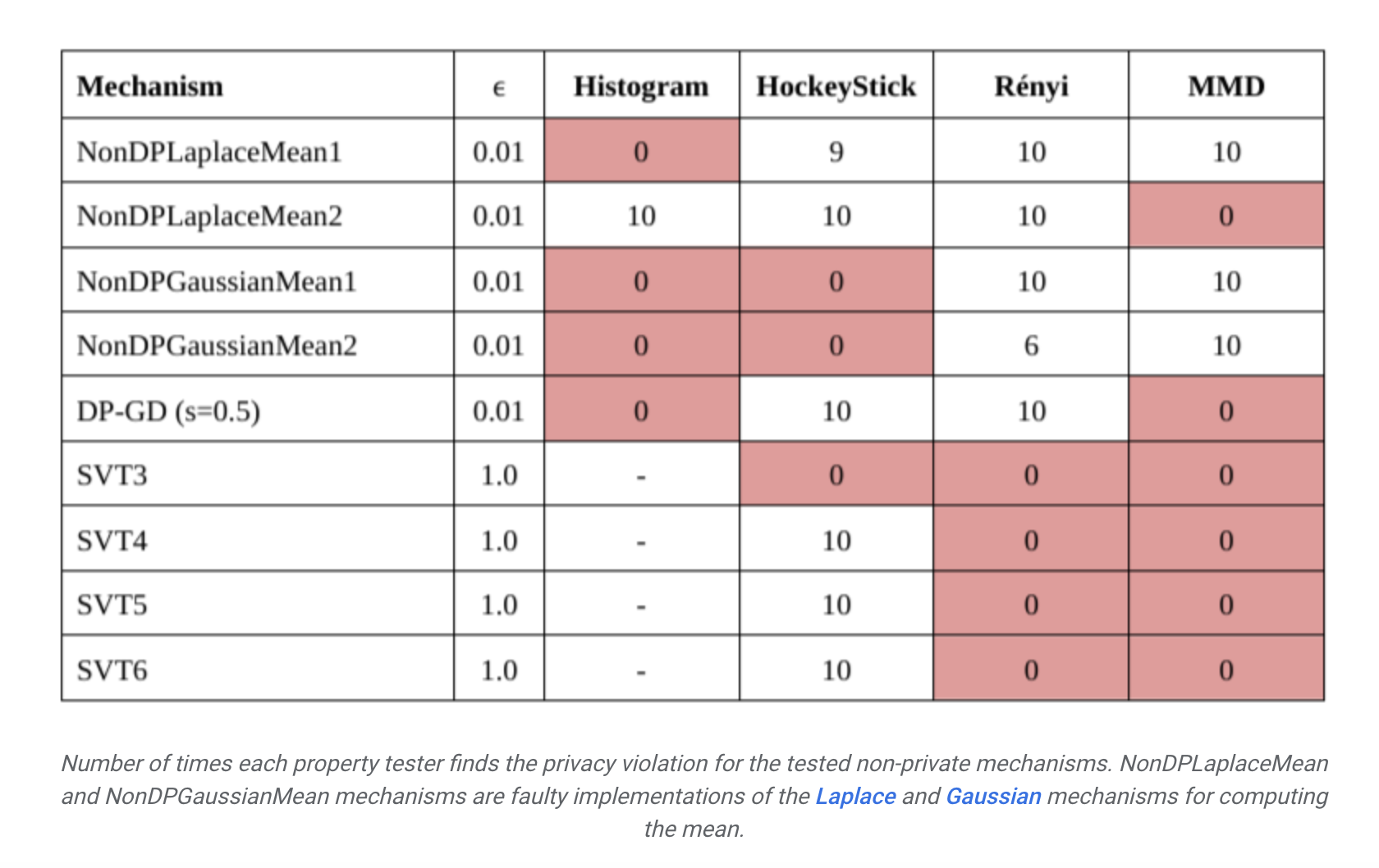

Existing techniques work, but they do not provide a unified framework for comprehensive, systematic evaluation. Complex settings call for more flexible and extensible verification tools. DP-Auditorium is designed to test differential privacy using only black-box access to a mechanism. It abstracts the testing process into two main steps: measuring the distance between output distributions and finding neighboring datasets that maximize this distance. It relies on a set of function-based testers, which are more flexible than traditional histogram-based methods.
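The two-step abstraction can be illustrated with a minimal sketch, assuming a one-dimensional mechanism that claims pure ε-DP (δ = 0). For the distance step, it estimates the hockey-stick divergence sup_f E_P[f] - e^ε E_Q[f], restricted to threshold test functions, which is one simple instance of a function-based tester. For the dataset-finding step, it uses plain random search, swapped in here for the Bayesian optimization the library relies on. The mechanisms (`clipped_mean`, `unclipped_mean`), the helper names, and the 0.1 tolerance are hypothetical and are not DP-Auditorium's API.

```python
import numpy as np

EPSILON = 1.0   # privacy level both mechanisms claim (pure epsilon-DP, delta = 0)
N_RECORDS = 10  # records per dataset; each record is meant to lie in [0, 1]


def clipped_mean(data, rng, n_samples):
    """Laplace mechanism for the mean of records clipped to [0, 1] (sensitivity 1/n)."""
    scale = (1.0 / len(data)) / EPSILON
    return np.clip(data, 0.0, 1.0).mean() + rng.laplace(0.0, scale, size=n_samples)


def unclipped_mean(data, rng, n_samples):
    """Hypothetical bug: noise calibrated for [0, 1] records, but records are not clipped."""
    scale = (1.0 / len(data)) / EPSILON
    return data.mean() + rng.laplace(0.0, scale, size=n_samples)


def hockey_stick_estimate(p, q, epsilon, n_thresholds=100):
    """Estimate sup_f E_P[f] - e^eps * E_Q[f] over f in {1[x < t], 1[x >= t]}.

    The threshold is picked on one half of the samples and scored on the other
    half, so searching over test functions does not inflate the estimate.
    """
    half_p, half_q = len(p) // 2, len(q) // 2
    p_fit, p_eval = np.sort(p[:half_p]), np.sort(p[half_p:])
    q_fit, q_eval = np.sort(q[:half_q]), np.sort(q[half_q:])
    thresholds = np.quantile(np.concatenate([p_fit, q_fit]), np.linspace(0.0, 1.0, n_thresholds))
    weight = np.exp(epsilon)

    def scores(ps, qs):
        # Empirical P(x < t) and Q(x < t) for every threshold t.
        below_p = np.searchsorted(ps, thresholds) / len(ps)
        below_q = np.searchsorted(qs, thresholds) / len(qs)
        # Column 0 scores f = 1[x < t]; column 1 scores f = 1[x >= t].
        return np.stack([below_p - weight * below_q,
                         (1.0 - below_p) - weight * (1.0 - below_q)], axis=1)

    best = np.unravel_index(np.argmax(scores(p_fit, q_fit)), (n_thresholds, 2))
    return scores(p_eval, q_eval)[best]


def audit(mechanism, rng, n_trials=50, n_samples=20_000, tolerance=0.1):
    """Random search over neighboring datasets; flags a violation above `tolerance`."""
    best = -np.inf
    for _ in range(n_trials):
        # Dataset finder: draw a dataset that may contain out-of-range records,
        # then build a neighbor by replacing a single record.
        data = rng.uniform(-1.0, 2.0, size=N_RECORDS)
        neighbor = data.copy()
        neighbor[0] = rng.uniform(-1.0, 2.0)
        # Divergence tester: compare output distributions using black-box samples only.
        estimate = hockey_stick_estimate(mechanism(data, rng, n_samples),
                                         mechanism(neighbor, rng, n_samples), EPSILON)
        best = max(best, estimate)
    return float(best), bool(best > tolerance)


rng = np.random.default_rng(0)
results = {name: audit(mechanism, rng)
           for name, mechanism in [("clipped", clipped_mean), ("unclipped", unclipped_mean)]}
for name, (best, flagged) in results.items():
    print(f"{name}: largest estimate {best:.3f}, violation flagged: {flagged}")
```

Under pure ε-DP, the hockey-stick divergence between outputs on any neighboring pair is zero, so the correctly clipped mechanism should stay near zero. The unclipped version leaks whenever an out-of-range record is replaced, so random search should find such a pair and flag it with high probability. Scoring the chosen threshold on held-out samples keeps the search over test functions from biasing the estimate upward.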

DP-Auditorium’s testing framework focuses on estimating divergences between a mechanism's output distributions on neighboring datasets. The library implements several algorithms for estimating these divergences, including histogram-based methods and dual divergence techniques. By leveraging variational representations and Bayesian optimization, DP-Auditorium achieves improved performance and scalability, enabling it to detect privacy violations across different types of mechanisms and privacy definitions. Experimental results show that DP-Auditorium detects a variety of bugs and handles different privacy regimes and sample sizes.
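For contrast, a histogram-based test for pure ε-DP can be sketched as follows. It bins samples from both output distributions on shared edges and takes the largest absolute log-ratio of bin frequencies over bins that are well populated in both. This is a minimal illustration, not the library's implementation. The names `laplace_count`, `noise_divisor`, `n_bins`, and `min_count` are hypothetical, and the neighboring pair is fixed (counts 100 and 101) rather than searched for.

```python
import numpy as np


def laplace_count(true_count, epsilon, rng, n_samples, noise_divisor=1.0):
    """Laplace mechanism for a count (sensitivity 1).

    noise_divisor > 1 simulates a hypothetical bug that adds too little noise.
    """
    scale = 1.0 / (epsilon * noise_divisor)
    return true_count + rng.laplace(0.0, scale, size=n_samples)


def histogram_epsilon_estimate(p, q, n_bins=50, min_count=200):
    """Largest |log(P(bin) / Q(bin))| over bins well populated under both P and Q."""
    edges = np.histogram_bin_edges(np.concatenate([p, q]), bins=n_bins)
    p_counts, _ = np.histogram(p, bins=edges)
    q_counts, _ = np.histogram(q, bins=edges)
    # Sparse bins give very noisy ratios, so only bins with enough samples in both are kept.
    keep = (p_counts >= min_count) & (q_counts >= min_count)
    if not keep.any():
        return 0.0
    log_ratios = np.log(p_counts[keep] / len(p)) - np.log(q_counts[keep] / len(q))
    return float(np.max(np.abs(log_ratios)))


rng = np.random.default_rng(1)
estimates = {}
for noise_divisor in (1.0, 3.0):  # 1.0 = correctly calibrated, 3.0 = too little noise
    p = laplace_count(100, 1.0, rng, 200_000, noise_divisor)  # outputs on dataset D
    q = laplace_count(101, 1.0, rng, 200_000, noise_divisor)  # outputs on neighbor D'
    estimates[noise_divisor] = histogram_epsilon_estimate(p, q)
    print(f"noise_divisor={noise_divisor}: estimated epsilon {estimates[noise_divisor]:.2f}")
```

For the correctly calibrated mechanism the estimate should land near the claimed ε = 1, while dividing the noise scale by 3 should push it toward 3. The `min_count` gate keeps sparsely populated bins from dominating the maximum, but it also means that differences confined to sparse regions go unmeasured.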

In conclusion, DP-Auditorium is a comprehensive and flexible tool for testing differential privacy mechanisms, addressing the growing need for reliable auditing as data privacy concerns increase. By abstracting the testing process and incorporating novel algorithms and techniques, it strengthens confidence in data privacy protection efforts.

Check out the Paper and Blog. All credit for this research goes to the researchers of this project.

Pragati Jhunjhunwala is a consulting intern at MarktechPost. She is currently pursuing her B.Tech from the Indian Institute of Technology (IIT), Kharagpur. She is a tech enthusiast with a keen interest in software and data science applications. She is always reading about developments in different fields of AI and ML.