This AI Paper from China Introduces MiniCPM: Innovative Small Language Models Through Scalable Training Approaches

Developing Large Language Models (LLMs) with trillions of parameters is costly and resource-intensive, prompting interest in Small Language Models (SLMs) as a more efficient option. Despite their potential, LLMs pose challenges due to their immense training costs and operational inefficiencies. Experiments at this scale are prohibitively expensive, so the mechanisms behind LLM training remain poorly understood. Deploying such large models on devices like PCs or smartphones is also often impractical or inefficient.

Recent interest in SLMs has led to the emergence of innovative models like the Phi series, TinyLlama, MobileLLM, and Gemma. While these models have enriched the SLM field, they still struggle in two key areas: replicating the comprehensive abilities of LLMs, and establishing transparent, scalable training methods that benefit the advancement of both SLMs and LLMs.

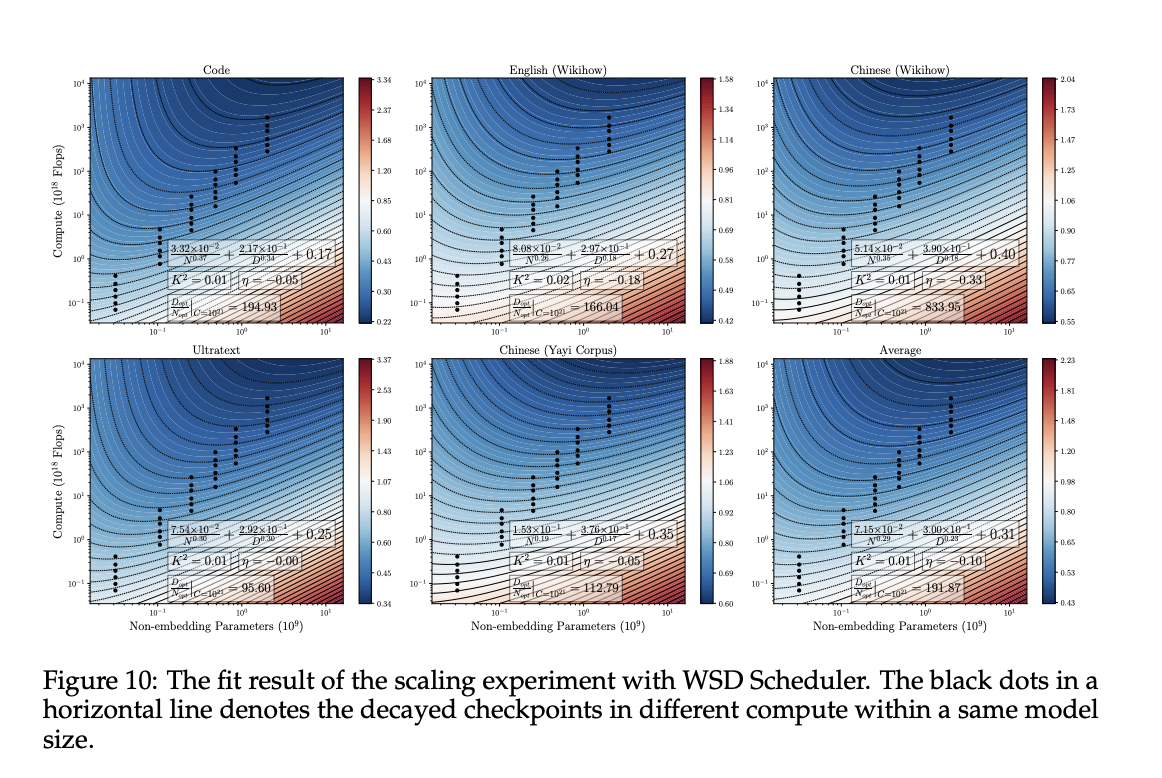

Researchers from the Department of Computer Science and Technology, Tsinghua University, and Modelbest Inc. introduce MiniCPM, a family of SLMs with 1.2B and 2.4B non-embedding parameters that rival 7B-13B LLMs in performance. Their approach emphasizes scalability in both the model and data dimensions, with a view to future LLM research. For model scaling, they use extensive model wind tunnel experiments to achieve stable scaling. For data scaling, they introduce a Warmup-Stable-Decay (WSD) learning rate scheduler that facilitates continuous training and domain adaptation. This method enables efficient study of the data-model scaling law, and the family also includes variants such as MiniCPM-DPO, MiniCPM-MoE, and MiniCPM-128K.

The Cosine Learning Rate Scheduler (LRS) is widely used to adjust the learning rate during training. After a warmup stage, it gradually reduces the learning rate along a cosine curve, with a key parameter T indicating the step at which the learning rate first reaches its minimum. Setting T to anything other than the total number of training steps S isn’t optimal: both T < S and T > S yield suboptimal results. Cosine LRS performs best when T = S, which the researchers attribute to a longer period of training at a high learning rate combined with a thorough decay phase, aiding in finding global and local optima. As an alternative to Cosine LRS, they propose the Warmup-Stable-Decay (WSD) LRS, which divides training explicitly into warmup, stable, and decay stages to enhance performance.
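To make the difference between the two schedules concrete, here is a minimal Python sketch of both. It is an illustration, not the authors' implementation: the function names, the linear warmup, the linear shape of the WSD decay stage, and the choice to hold the cosine schedule at its minimum once step T is passed are all assumptions made for this example.

```python
import math


def cosine_lr(step, peak_lr, warmup_steps, T, min_lr=0.0):
    """Cosine schedule: linear warmup, then a cosine curve that first
    reaches min_lr at step T (hypothetical helper for illustration)."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    if step >= T:
        # Assumption: stay at the minimum once T has been reached.
        return min_lr
    progress = (step - warmup_steps) / (T - warmup_steps)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def wsd_lr(step, peak_lr, warmup_steps, stable_end, total_steps, min_lr=0.0):
    """Warmup-Stable-Decay schedule: linear warmup, a constant stage at
    peak_lr, then a decay stage (hypothetical helper for illustration)."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    if step < stable_end:
        return peak_lr
    # Assumption: linear decay; other decreasing shapes would also fit
    # the warmup/stable/decay description.
    progress = min(1.0, (step - stable_end) / (total_steps - stable_end))
    return peak_lr + (min_lr - peak_lr) * progress


if __name__ == "__main__":
    S = 100  # total training steps
    for step in (0, 10, 55, 95, 100):
        print(step,
              round(cosine_lr(step, 1.0, 10, T=S), 4),
              round(wsd_lr(step, 1.0, 10, stable_end=90, total_steps=S), 4))
```

In this sketch the stable stage of `wsd_lr` does not depend on the total number of steps, which is one way to see why such a schedule lends itself to continued training: the stable stage can be extended, with the decay stage applied only when a final model is needed.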

Observations show that, on average, MiniCPM-2.4B ranks highest among SLMs. It performs similarly to Mistral-7B-v0.1 in English but surpasses it significantly in Chinese. MiniCPM-2.4B outperforms Llama2-13B in most areas except MMLU, BBH, and HellaSwag, while MiniCPM-1.2B outperforms Llama2-7B except in HellaSwag. Compared with knowledge-oriented datasets, BBH is generally harder for SLMs than for LLMs, suggesting that reasoning ability may depend more on model size than knowledge does. Phi-2 matches MiniCPM’s performance on academic datasets, possibly because its training data emphasizes educational contexts.

In conclusion, this paper introduces MiniCPM, featuring two SLMs with 2.4B and 1.2B non-embedding parameters, respectively, that perform on par with or better than considerably larger models. Their scalable training methodologies show promise in both model and data size, with potential applications in LLM development. The WSD scheduler supports continuous training and makes studying the scaling law more efficient. The paper also introduces the wider MiniCPM family, including DPO, long-context, and MoE versions. Future directions include analyzing the loss decrease in the decay stage and enhancing MiniCPM’s capability by scaling both model and data size.

Check out the Paper. All credit for this research goes to the researchers of this project.


Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in mechanical engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.